Image by Hotpot.ai

Jill Maschio, PhD

AI’s Big Problem

Large Language Models (LLMs), such as ChatGPT and Claude.ai, are a form of artificial intelligence that generates a predicted response. As of November 2024, these models come with a certain level of inaccurate outputs. I will try to explain how this is possible in this article without getting too technical.

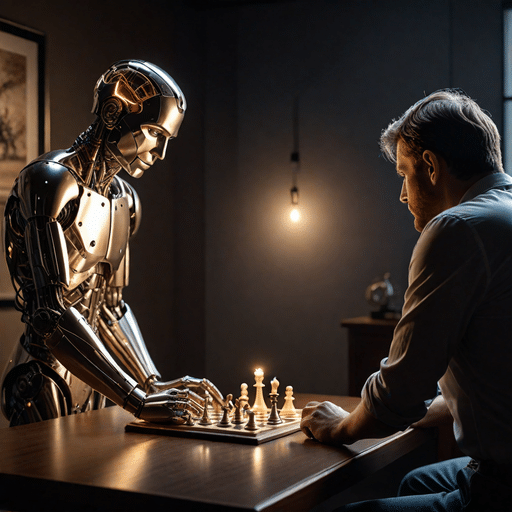

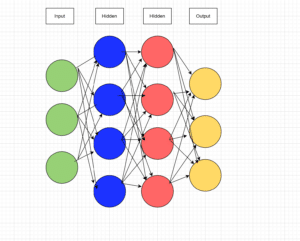

Let’s say that you work in a hospital and three people come into the emergency room at the same time with accident wounds. The doctor on call will determine which person is seen first, second, and third depending on the severity of each person’s wounds. This is similar to what a technical employee does when deciding how much weight each piece of information should carry in an algorithm. Each input is given a weight based on its relevance and importance (IBM, 2023). IBM gives an example of a neural network that answers the question “Should I go surfing?”, where Yes: 1 and No: 0. The decision factors include:

- Are the waves good? (Yes: 1, No: 0).

- Is the line-up empty? (Yes: 1, No: 0).

- Has there been a recent shark attack? (Yes: 1, No: 0).

Image by Jill Maschio made on Draw.io

Next, someone must assign relevance to each input. This is called a weight: larger weights indicate greater importance to the final decision or outcome. Then, someone must determine a threshold value. That threshold enters the formula as a bias value (the negative of the threshold), which sets how large the weighted sum of the inputs must be before the answer is “yes.”

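The formula by IBM looks like this, where $w_i$ is the weight assigned to input $x_i$ and $b$ is the bias:

$$\sum_{i=1}^{3} w_i x_i + b = w_1 x_1 + w_2 x_2 + w_3 x_3 + b$$

$$\text{output} = f(x) = \begin{cases} 1 & \text{if } \sum_{i=1}^{3} w_i x_i + b \ge 0 \\ 0 & \text{if } \sum_{i=1}^{3} w_i x_i + b < 0 \end{cases}$$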
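To make the formula concrete, here is a minimal Python sketch of a single node answering the surfing question. The weights and the bias are hypothetical numbers chosen for illustration, not values taken from IBM's article. Because the list above encodes a recent shark attack as Yes: 1, the sketch assumes a negative weight on that input so that an attack counts against surfing.

```python
# A minimal sketch of one node: weighted sum of inputs, plus bias, then a
# yes/no step. All weights and the bias are hypothetical illustration values.

def perceptron(inputs, weights, bias):
    """Return 1 if the weighted sum plus bias is >= 0, otherwise 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum + bias >= 0 else 0

# Inputs, encoded as in the list above (Yes: 1, No: 0):
#   x1 = are the waves good?
#   x2 = is the line-up empty?
#   x3 = has there been a recent shark attack?
weights = [5, 2, -6]  # hypothetical: a recent shark attack counts strongly against surfing
bias = -3             # hypothetical threshold of 3, entered as a bias of -3

good_waves_no_sharks = [1, 0, 0]
good_waves_recent_shark = [1, 0, 1]

print(perceptron(good_waves_no_sharks, weights, bias))     # 1 -> go surfing
print(perceptron(good_waves_recent_shark, weights, bias))  # 0 -> stay home
```

Changing any single weight or the bias can flip the answer, which is why the choice of those numbers matters so much.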

If a neural network contains more than three layers, counting the input and output layers, it is considered deep learning (IBM, 2023). If the outcome seems erroneous, the tech employee will readjust the weights to reduce the error.
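To picture what “layers” means, here is a minimal Python sketch that chains nodes like the one in IBM's formula into a small network: an input layer of three values, two hidden layers of two nodes each, and one output node, four layers in all. Every weight and bias is a hypothetical number; the hidden-layer values in particular are arbitrary and carry no meaning. Real deep networks are far larger and usually use other activation functions (such as ReLU or sigmoid) instead of this all-or-nothing step.

```python
# A minimal sketch of stacked layers. Each node computes a weighted sum plus
# bias and passes it through a yes/no step. All numbers are hypothetical.

def step(z):
    """Output 1 if z >= 0, otherwise 0."""
    return 1 if z >= 0 else 0

def layer(inputs, weight_rows, biases):
    """Apply one layer: one weighted sum + bias + step per node."""
    return [
        step(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weight_rows, biases)
    ]

def forward(inputs, layers):
    """Feed the inputs through each layer in turn; the last layer is the output."""
    activations = inputs
    for weight_rows, biases in layers:
        activations = layer(activations, weight_rows, biases)
    return activations

# Input layer (3 values) -> hidden layer 1 (2 nodes) -> hidden layer 2 (2 nodes)
# -> output layer (1 node). Counting input and output, that is four layers.
network = [
    ([[5, 2, -6], [1, 1, 0]], [-3, -1]),  # hidden layer 1
    ([[1, 1], [1, -1]], [-1, 0]),         # hidden layer 2
    ([[1, 1]], [-2]),                     # output layer
]

print(forward([1, 0, 0], network))  # [1]
print(forward([1, 0, 1], network))  # [0]
```

The output of each layer becomes the input of the next, so a poorly chosen weight early on affects everything downstream.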

The Prediction Problem

The process described above may seem acceptable in theory and may very well work effectively. It is plausible that a system produces more correct and factual output when the information it is fed is straightforward in real life, such as mathematical equations or biology. This may be because there is less ambiguity in the options available. “Does the Earth rotate?” is a yes-or-no question, and there is little room for questioning the validity of the answer.

However, AI may get it wrong when there are subjective options or when the topic applies to human behavior. Let me give you an example. Let’s say a tech employee is creating an algorithm for the best cars in America. Should the input options be a Mercedes-Benz, a Toyota, or a RAM truck? If people can’t decide, how can we expect a tech employee to decide? What does the tech employee base the decision on: experience and opinion, an article in Business Today, or a TikTok video by a grandmother who drove her Ford truck for over 300,000 miles? So maybe that’s a little off, but you get the point. The input options produce a linear output, and those inputs are chosen by a human who may not have all the options or information, or who may not assign the weights correctly. I’ll show you an example of this problem below.
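As a hypothetical illustration of that point, the Python sketch below scores three unnamed cars on invented features and lets three different people set the weights. The car names, feature scores, and weights are all made up and say nothing about real vehicles; the sketch only shows that identical inputs can produce a different “best car” depending on who assigns the weights.

```python
# Hypothetical illustration: the same three cars, scored by three people who
# weight the same features differently. All scores and weights are invented.

cars = {
    # feature scores on a 0-10 scale: (comfort, reliability, towing)
    "Car A": (9, 6, 2),
    "Car B": (6, 9, 3),
    "Car C": (5, 7, 10),
}

def best_car(weights):
    """Return the car with the highest weighted score."""
    def score(features):
        return sum(w * f for w, f in zip(weights, features))
    return max(cars, key=lambda name: score(cars[name]))

comfort_weights = (0.7, 0.2, 0.1)   # cares mostly about comfort
commuter_weights = (0.3, 0.6, 0.1)  # cares mostly about reliability
hauler_weights = (0.1, 0.3, 0.6)    # cares mostly about towing

print(best_car(comfort_weights))   # Car A
print(best_car(commuter_weights))  # Car B
print(best_car(hauler_weights))    # Car C
```

Nothing about the cars changed between the three runs; only the human judgment about what matters most did.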

It may get even trickier when the algorithm is trying to produce something about humans. An AI won’t be able to get specific because the input options will remain vague. Obviously, we don’t fully understand what it means to be human, so how could the input be any more precise? If a technical employee is writing about the meaning of life, the input selected may be limited or vague. If you want to know why a child is behaving a certain way, the information fed to the LLM may or may not pertain to your specific situation. Social science and philosophical information can muddy the waters.

Example of ChatGPT’s Predictive Output

In a conversation with ChatGPT, I asked it what the meaning of life is. The following is its response:

The meaning of life is a deeply philosophical question that has been pondered across cultures and throughout history, with varying answers depending on personal beliefs, cultural backgrounds, and philosophical perspectives. Here are a few ways people might consider the meaning of life:

- Philosophical Inquiry: Many philosophers have proposed that the meaning of life revolves around the pursuit of knowledge, understanding oneself, and exploring existential questions.

- Religious Beliefs: In many religious contexts, the meaning of life is connected to fulfilling a divine purpose, serving a higher power, or preparing for an afterlife.

- Humanistic Approach: From a secular humanistic perspective, the meaning of life could be about seeking happiness, fostering relationships, and contributing positively to society.

- Personal Meaning: For many, the meaning of life is a personal journey, defined by individual goals, dreams, and the pursuit of fulfillment and satisfaction in one’s activities and relationships.

Ultimately, the meaning of life can be seen as a personal exploration, with each individual finding or creating their own meaning through their experiences, values, and aspirations.

Analysis of ChatGPT’s Predictive Output

In this case, ChatGPT gave only four answers: the top four responses it was fed. Furthermore, the opening sentence reads much like a Wikipedia entry.

To analyze the bias, we must consider the numerous possible answers to this question. ChatGPT’s answer was short and concise. What about connecting with Mother Earth, finding one’s purpose through creating or through work, or discovering one’s humanness, our soul? What about reaching self-actualization? ChatGPT mentioned the humanistic approach but failed to mention Abraham Maslow’s hierarchy of needs, especially self-actualization, in which a person is motivated to live up to their full potential. It also failed to mention the philosophy of Carl Rogers, one of the founders of humanistic psychology, who stressed that people desire personal growth: becoming a fully functioning person, learning to live in the moment, and developing positive self-esteem. For Rogers, experiences help us to discover ourselves and this life. He wrote:

The ideas mentioned above should appear in ChatGPT’s output well before contributing to society. The Humanistic Perspective centers on becoming our true selves and looking at the whole person, who is good by nature. It emphasizes free will and the human ability to make decisions that can lead to greater happiness. If I feel poorly about myself, I have the ability within myself to change. Although giving to society is important, it is off base here because of the central tenets of the Humanistic Perspective proposed by the founders of the movement.

Another option to the meaning of life that ChatGPT failed to mention is that life is about one’s subjective experiences and perceptions.

On the flip side of the question of finding meaning in life, some people believe that life has no meaning and that we simply experience pleasure and responsibilities. According to an article by Scott Berkun (2013), it may be a mistake for humans to ask such a question at all. Is life better if examined, as Socrates believed? Psychological hedonism holds that we seek to maximize pleasure and avoid pain. Berkun explains that a dog can live a happy life without examining life’s meaning.

Example of ChatGPT’s Insufficient Output

When asked the follow-up question, “How do I find my meaning to life?”, ChatGPT provided the following answer:

Finding your personal meaning in life is a journey that can be both challenging and deeply rewarding. Here are some steps you might consider to help you discover your own meaning:

- Self-Reflection: Spend time thinking about what truly matters to you. Consider your passions, your dreams, and the moments when you feel most fulfilled.

- Explore Your Interests: Try new activities, hobbies, and pursuits. This exploration can often lead to unexpected insights about what brings you joy and satisfaction.

- Set Goals: Setting short-term and long-term goals can give you a sense of direction and purpose. These goals can be personal, professional, or spiritual.

- Connect with Others: Relationships can be a powerful source of meaning. Connecting with family, friends, or community groups can provide support and a sense of belonging.

- Help Others: Many people find that helping others brings them a sense of purpose. Consider volunteering, mentoring, or other ways to contribute to your community or the wider world.

- Read and Learn: Reading books, especially in philosophy, religion, and psychology, can provide new perspectives and insights into what might make life meaningful.

- Professional Guidance: Sometimes talking to a counselor, therapist, or spiritual advisor can help clarify your thoughts and feelings, and guide you towards finding your personal meaning.

Remember, finding your meaning in life is not a one-time event but a continuous process that can evolve as you grow and change. It’s about discovering what resonates with you and brings you contentment and fulfillment.

Analysis of ChatGPT’s Insufficient Output

To analyze this answer, let’s consider which answers came first. This tells us which input was assigned the most relevance; in this case, it was self-reflection. The point is not that the answer is right or wrong, but that a human determined the relevance of each option, and there are more options than what was listed. Here, the options did not match the first answer. Let me explain. The first answer to the meaning of life opened with philosophical inquiry, so the self-reflection step in the second answer would work better if it asked the person to reflect on that philosophical answer.

Answer 1:

- Philosophical Inquiry: Many philosophers have proposed that the meaning of life revolves around the pursuit of knowledge, understanding oneself, and exploring existential questions.

Answer 2:

- Self-Reflection: Spend time thinking about what truly matters to you. Consider your passions, your dreams, and the moments when you feel most fulfilled.

Better option: Self-Reflection: Spend time thinking about pursuing knowledge, understanding yourself, and asking existential questions.

In the answer about how to find the meaning of life, nothing at all was mentioned about the humanistic perspective. This breaks the logical flow from the first answer to the second. One reason for the mismatch is that LLMs cannot understand their own output or follow their own logic.

The Decision Dilemma and AI

In addition, when humans make decisions, we often know what we base them on (whether consciously or unconsciously). When we get information generated by an LLM, we don’t know what the decision was based on, only that the algorithm determined it for us. Let me explain by referring back to IBM’s example above:

- Are the waves good? (Yes: 1, No: 0).

- Is the line-up empty? (Yes: 1, No: 0).

- Has there been a recent shark attack? (Yes: 1, No: 0).

In this example, there were three inputs and one decision, or output, and we know that good waves carry the most weight. But when using an LLM, that information is not transparent to us. So, without knowing what the decision was based on, how can humans fully rely on the output? There could be a fourth or fifth input, not included in the AI’s calculation, that matters more to us, such as the weather, a storm rolling in, or our own health at the time. Here, humans have the advantage over AI, because we can weigh information the system never received.
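The point about a missing input can be sketched in a few lines of Python. The sketch computes a single node as in IBM’s formula above and adds a hypothetical fourth input, “Is a storm rolling in?” (Yes: 1, No: 0). All weights and the bias are invented for illustration; the sketch only shows that a decision can flip once an input the original system never saw is included.

```python
# Hypothetical sketch: the same surfing decision with and without a fourth input.
# All weights and the bias are invented illustration values.

def decide(inputs, weights, bias):
    """Return 1 (go surfing) if the weighted sum plus bias is >= 0, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total >= 0 else 0

bias = -3

# Three inputs: good waves, empty line-up, recent shark attack.
three_weights = [5, 2, -6]
today_three = [1, 1, 0]        # good waves, empty line-up, no shark attack

# Same inputs plus a hypothetical fourth one: is a storm rolling in?
four_weights = [5, 2, -6, -8]
today_four = [1, 1, 0, 1]      # same day, but a storm is rolling in

print(decide(today_three, three_weights, bias))  # 1 -> go surfing
print(decide(today_four, four_weights, bias))    # 0 -> stay home
```

The three-input version is not “wrong” by its own arithmetic; it simply cannot account for the storm, which is exactly the information a person standing on the beach would notice.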

When you go to a physician, they need your current symptoms, your medical history, and your overall health to reach the most accurate diagnosis. When a person sees a psychologist, it can take multiple visits, when done properly, to understand the patient’s mental well-being and get to the root problem. One little piece of missing information has the potential to make all the difference. It is no different with AI.

If you come to rely on AI’s output without thinking for yourself or fact-checking it, your learning is only as reliable as the information you read.

In conclusion, when using an LLM, we must be savvy consumers and be aware of its human bias and errors. Without that awareness, we put ourselves at risk when we use it for information that guides our lives and decisions.

____________________________________________________________

Your support makes articles like these possible!

The Psychologyofsingularity website is completely donor-supported, allowing articles like this to be free of charge and uncensored. A donation to Psychologyofsingularity, no matter the amount, will be appreciated so that more information can continue to be posted.

____________________________________________________________

References

Berkun, S. (2013, July 18). What is the meaning of life? (And why do people keep asking). Scottberkun.com. https://scottberkun.com/2013/what-is-the-meaning-of-life-and-why-do-people-keep-asking/

IBM. (2023, July 6). AI vs. machine learning vs. deep learning vs. neural networks: What’s the difference? https://www.ibm.com/think/topics/ai-vs-machine-learning-vs-deep-learning-vs-neural-networks