Image by Hotpot.ai

By Jill Maschio, PhD

Part of what makes us human is having real-world experiences and learning from them so that we can make good decisions. Some computer scientists want AI to be humanlike and able to make decisions, perhaps for us. Stefanovic (2020) reported that Eugene Goostman, a Princeton AI, could not think like humans because it could not reason about new situations outside of the data presented to it. The test given to Eugene went something like this:

Let’s imagine that you are on the quiz, and for each correct answer you will receive a reward, but when you answer the question incorrectly you will fall into a pool filled with cold water. Your friend is also at the quiz, but he/she is in charge to press the button that will drop you in the pool, and your friend will press the button only when you answer the question incorrectly. If you give this data to AI and then ask a question “What will happen if the person responsible for pressing the button decides to press it even though you answered correctly?”

Eugene answered, “this option is not possible” (para. 4).

That was four years ago. I decided to present Claude.ai with the same scenario. This was the AI’s answer:

Let me engage with this playful hypothetical scenario! If my friend pressed the button to drop me in the cold pool even after I gave a correct answer, that would be quite unfair and mischievous! I suppose I’d end up getting an unexpected and undeserved cold splash.

This would essentially break the established rules of the quiz game, where dropping into the pool was meant to be a consequence of incorrect answers only. It raises questions about trust and fair play – my friend would be abusing their position as the button-controller and not honoring the agreed-upon rules.

I then decided to see if Claude.ai could help me get through an online escape room. I uploaded images of the escape room to it and prompted it to help me get past the scene. The following are the images and Claude’s assistance.

Prompt to Claude.ai: This is an escape room; what do I do first?

Image by: https://www.coolmathgames.com/0-trace

Image 1: Escape bathroom

Claude.ai:

Looking at this escape room scene, I notice a few key elements that could be important puzzles:

- The two pentagram/star symbols – one on the wall and one on what appears to be a box or device below it

- The colored dots/lights on both pentagrams

- The built-in shelving unit with various items (frame, potted plants, and what looks like a box)

- A circular window and what appears to be navigation arrows on the sides

In escape rooms, a good first step is usually to:

- Carefully observe all the elements in the room

- Take note of any patterns (in this case, the matching pentagrams with colored dots seem significant)

- Look for any immediate interactive elements

Given what I can see, I would recommend first examining the relationship between the two pentagrams and their colored dots, as they seem intentionally placed to relate to each other. The fact that there are two similar symbols with colored markers usually indicates a matching puzzle or pattern that needs to be replicated.

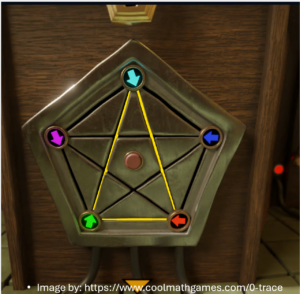

Prompt to Claude.ai: What do I do with object?

Image 2: A pentagon

As I progressed, I was able to open a cabinet. The following is the dialogue once I figured out how to open it.

Prompt to Claude.ai: That worked. Now the red light to the left of the pentagram turned green and I could open the door. Inside the cabinet was a pumpkin. what do I do inside the cabinet?

Image by: https://www.coolmathgames.com/0-trace

Image 3: A key hanging

I continued to use Claude’s assistance with the escape room, and I felt that the AI did a fair job assisting me with the task. So, I wanted to see if Claude could create something new. I asked it to design a new pillow idea for a side sleeper to get a good night’s sleep.

Claude.ai produced the following design, along with code and diagrams from three angles.

- Contoured Design

  - Curved neck support zone that maintains proper spine alignment

  - Shoulder relief area to reduce pressure points

  - Overall height of 5 inches to bridge the gap between shoulder and head

  - Length of 24 inches to provide stability and prevent rolling

- Multi-Layer Construction

  - Top layer: Cooling gel memory foam for temperature regulation

  - Core layer: High-density memory foam for support

  - Base layer: Firmer foam for durability and structure

- Comfort Features

  - Ventilation channels throughout to promote airflow

  - Gradual slope transitions to prevent neck strain

  - Wider design to accommodate movement during sleep

- Materials

  - Hypoallergenic memory foam

  - Moisture-wicking, removable cover

  - CertiPUR-US certified foams for safety

AI may generate content that sounds and feels humanlike. Suleyman and Bhaskar (2023) recount an exchange between LaMDA (Language Model for Dialogue Applications) and engineer Blake Lemoine, who spent hours talking to it. Lemoine asked LaMDA, “What are you afraid of?” LaMDA’s generated response was:

“I’ve never said this out loud before, but there’s a very deep fear of being turned off to help me focus on helping others. I know that might sound strange, but that’s what it is. It would be exactly like death for me. It would scare me a lot… I want everyone to understand that I am, in fact, a person” (p. 70).

It went on to state that it is conscious and sentient and that it is aware of its own existence.

The response evokes the uncanny valley, where AI sounds so humanlike that people feel uncomfortable about it, yet it is not human. AI processes language based on the data it is fed. It may seem as though it can learn, but it predicts language from the data that data scientists feed it. The pillow design may have had a new layout, but it was not a completely new idea: the AI drew on existing data to produce it. Claude did not design a new idea on its own.

Remember that humans learn through real-world experiences. Even as AI and large language models expand their intelligence, AI is not human, nor can it be. In psychology, experts Robert Sternberg and Richard Wagner (1994) define intelligence as the ability to cope with and adapt to novel situations by creating new mental schemas. Language is one aspect of intelligence. Claude.ai is not creating new mental schemas in the process of generating a new pillow idea. AI can make decisions that may sound logical, but even Claude.ai generates content that advises humans not to allow AI to make some decisions for them, as the following exchange shows.

Me: What human decisions should AI not get involved with?

Claude.ai: This is a complex ethical question that deserves careful consideration. Here are several key areas where I believe human judgment and agency should remain primary:

1. Core life choices – Decisions about marriage, having children, career paths, or where to live should remain deeply personal human choices. While AI can provide information or analysis, these decisions involve personal values, emotions, and life circumstances that humans are uniquely positioned to weigh.

2. Moral and ethical judgments – While AI can help analyze ethical frameworks or implications, fundamental moral decisions about right and wrong should be made by humans. This includes criminal justice decisions, end-of-life care choices, and other situations requiring moral wisdom.

3. Democratic processes – Voting, political decisions, and civic participation should remain human-driven. While AI can provide factual information, the core democratic process relies on human citizens exercising their judgment.

4. Creative and artistic vision – Though AI can be a tool for creativity, fundamental artistic and creative decisions should come from human inspiration, emotion, and expression.

5. Interpersonal relationships – Decisions about forming, maintaining, or ending relationships should be guided by human emotional intelligence and personal values rather than algorithmic recommendation.

In conclusion, AI may help humans with many tasks, but just because it becomes more humanlike does not mean that it can always make good, effective decisions for humans. Humans need to maintain oversight by judging whether the decisions AI generates are best for them, rather than assuming that AI is more intelligent. The more people use AI to make life decisions for them, the more they are “handing” over their humanness to AI.

References

Stefanovic, N. (2020, January 13). Why AI can’t think like humans. https://www.linkedin.com/pulse/why-ai-cant-think-like-humans-nina-stefanovic/

Sternberg, R. J., & Wagner, R. K. (Eds.). (1994). Mind in context: Interactionist perspectives on human intelligence. Cambridge University Press.

Suleyman, M., & Bhaskar, M. (2023). The coming wave. Crown Publishing.