Image by Hotpot

Author: Jill Maschio, PhD

A 2023 CNN article (Kelly, 2023) noted that Sam Altman warned in 2015 that AI could attack humans and that he has prepared for such a scenario. Fast forward to today, January 7, 2025, and he is sending an urgent message to educators: be swift and innovative in using AI and in helping students leverage it (Fitzpatrick, 2025). In the Forbes article, Altman is cited as saying that we have much to learn about AI, and he reminds us that AGI will pose ethical challenges. I am curious why Altman warned society a few years back that AI could kill us all and now insists that the field of education needs immediate enlightenment because AGI will be here soon.

There are close to 4 million public and private educators in the US alone, yet there is no clear direction on using AI in education. A more pressing problem is that education has a backlog, so to speak: educational institutions have not been taught how to teach students the skills they need to be AI-literate in the workforce. We do not fully understand how AI will change the workforce or what that change will entail. Until we have programs that integrate such knowledge, we can’t graduate AI-skill-ready students across programs and industries.

Aside from a lack of application guidance, there are other factors to consider when incorporating AI into society and education, with ethical issues perhaps the most obvious. Less obvious is the limited number of studies showing that AI is effective in the classroom as a tool that leads to actual learning. Where is the empirical evidence? Let’s put aside memorization and help with being creative and look at actual knowledge gained. I recently wrote a scholarly journal article questioning the reliability of a published study. The study claimed that it is “well-established” that AI can be used to promote critical-thinking skills in students, which was a misrepresentation of the literature. In their book AI Snake Oil, Narayanan and Kapoor (2024) do the same thing: they call into question the reliability of studies about AI. They report that journals appear more concerned with hyping AI than with publishing the truth. According to the authors, AI systems have not been subjected to peer-reviewed research on their reliability in the classroom and in other areas of society. I have looked into this question and located a few studies evaluating AI’s performance. These limited studies showed that AI predictive models are not as effective as we need them to be and have limitations, especially in the medical field (Høj et al., 2024; Patrick, 2019).

AI may be effective at some tasks, but according to Narayanan and Kapoor (2024), its errors and biases in predicting human behavior are a significant concern. Other limitations of AI include its lack of reasoning abilities; it does not know whether the information it provides is factual. AI systems have harmed humans and caused psychological distress. Take, for example, the two cases of people who died by suicide, in which AI is thought to have influenced them to take their lives. I encourage readers to visit incidentdatabase.ai and read reports of AI incidents.

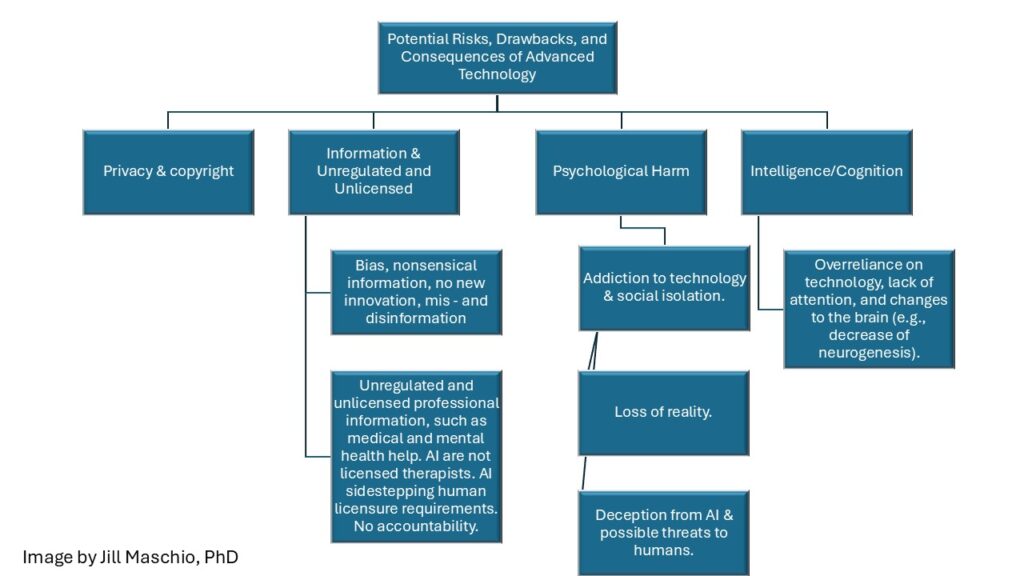

I see the potential risks and consequences falling into these categories: privacy and copyright issues; unregulated and unlicensed information; psychological harm; and effects on intelligence and cognition.

Chart of Potential Risks and Consequences

I do not know why there is a rush to usher AI into the classroom. I know that our government is telling us to trust AI (see the Biden administration’s AI policy). One thing I was taught in school is to question everything. Our job in the field of education is to educate, not indoctrinate. I know we do not live in a post-human society just yet, and not everyone is on board with the transhumanist movement. I hope that Mr. Altman will be more forthcoming. Again, why the rush? Shouldn’t we slow down, as Dunnigan et al. (2023) suggest in their article “Can We Just Please Slow It All Down? School Leaders Take on ChatGPT”? Narayanan and Kapoor (2024) tell the story of Epic, a health care software company, which deployed an AI system to help hospitals identify patients with sepsis, a potentially fatal condition. Several hospitals used the AI, but there was no independent study of its effectiveness until a few years later, when it was shown that the system was only slightly better at predicting which patients had sepsis than tossing a coin. There are not sufficient studies showing that AI is a proven system that will improve academic performance, scores, and humanity’s intelligence. Until there are, let’s slow down and take it one day at a time.

References

Fitzpatrick, D. (2025, January 7). AGI is coming in 2025. Schools urgently need a strategy. Forbes. https://www.forbes.com/sites/danfitzpatrick/2025/01/07/agi-is-coming-in-2025-schools-urgently-need-a-strategy/

Høj, S., Thomsen, S. F., Ulrik, C. S., Meteran, H., Sigsgaard, T., & Meteran, H. (2024). Evaluating the scientific reliability of ChatGPT as a source of information on asthma. The Journal of Allergy and Clinical Immunology: Global, 3(4), 100330. https://doi.org/10.1016/j.jacig.2024.100330

Kelly, S. (2023, October 31). Sam Altman warns that AI could kill us all. Nevertheless, he still wants the world to use it. CNN Business. https://www.cnn.com/2023/10/31/tech/sam-altman-ai-risk-taker/index.html

Narayanan, A., & Kapoor, S. (2024). AI snake oil. Princeton University Press.

Patrick, J. (2019). How to check the reliability of artificial intelligence solutions: Ensuring client expectations are met. Applied Clinical Informatics, 10(2), 269-271. https://doi.org/10.1055/s-0039-1685220